The Nerdiest Possible Way to Make Sourdough

Making sourdough starter with a little help from modern computer vision models.

Sourdough bread is delicious, nutritious, and annoyingly temperamental. It promises the tastiest bread, pancakes, and even donuts that you have ever tasted, but in order to reach that promised land, you have a number of obstacles to overcome, the first being the creation of a healthy Sourdough Starter.

For those who are not currently sourdough bakers, there are at least two different sourdough tutorials on YouTube. I recommend this one. The quick overview is that you add water to flour, and it grows yeast. After a few days this turns into sourdough starter. (People who like giving other people money can also buy sourdough starter.) Then, you give this thing more flour and water every time it gets hungry until you are ready to make something bready. Then you put some of the sourdough starter in with the other ingredients for the bready thing, let it sit on your countertop for a few hours, and the new bready thing also gets fluffy. But this isn’t sourdough starter anymore; this is now a Bread Thing. The difference is that it contains more than just flour and water, and you probably kneaded it at some point. And now it tastes awesome when baked. It’s probably worth noting that there is an alternative to all this for people who don’t like flavor, and that’s packaged yeast or some combination of baking powder and/or baking soda. But I like flavor, so I’m going to focus on the sourdough method.

Some more details on the Sourdough Starter specifically: Sourdough starter, which is the first step of the process mentioned above, is a living creature that humans find tasty. When combined with bread ingredients and allowed to sit out on the counter, it makes thousands of little bubbles which humans think taste good, and makes bakers say things like, “That’s got a good crumb to it,” which doesn’t make any sense, but also doesn’t matter because the bread is delicious.

The key to that sourdough starter, though, is exactly how long you let it rise before giving it more flour and water. If you don’t let your starter rise long enough, the quantity of yeast will be too low. If you let your starter rise too long, the quantity will have maxed out, but the yeast will no longer be alive, as they will have run out of food. You want the largest number of active, healthy yeast possible. The more yeast, the more CO2 bubbles, and CO2 bubbles are what make your bread nice and light and fluffy. (The delicious taste comes from the yeast poop, but we won’t talk about that here.)

So, the key to making delicious sourdough turns out to be using “active” sourdough starter. Once upon a time, folks used to simply stare at the sourdough starter until it stopped moving (between about 10 and 48 hours). This worked really well, but then they invented the internet, and now everyone gets distracted, making it impossible to know when the sourdough starter is actually done. So, I realized that the only possible way to get good sourdough starter in 2024 was to create an AI to watch it for me. Ideally, I will set this thing up, and then go back to watching cat videos until the exact moment when the sourdough is done, thus optimizing both my sourdough flavor and cat-video-watching time.

Step 1: Labeled Timelapse using Python + ffmpeg

Step 1 was just getting my computer to watch the sourdough for me. This was a bit trickier than I anticipated because, while you can certainly point your phone or webcam at sourdough and click on “timelapse”, it’s actually surprisingly difficult to find a way to, say, control the speed of the video, or to automatically capture how long the video has gone on (so you know how quickly your sourdough rises on average). So, I ended up resorting to Python. The first version looked like this:

Use a tiny little Python script to capture the image in a nice structured way, once every minute.

Set up ffmpeg to create a movie from the still images at 30 fps

Ask ffmpeg to create an hours/minutes label, based on the understanding that each frame represents one minute of real time (so at 30 fps, one second of video covers 30 minutes).
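The three steps above can be sketched roughly as follows. This is a minimal illustration, not the project’s actual script: it assumes a webcam readable through OpenCV (`opencv-python`) and `ffmpeg` on the PATH, and the function names and the `frame_%05d.jpg` naming scheme are made up for the example. It also swaps one technique: the hours/minutes label is stamped onto each still in Python instead of being drawn by ffmpeg, since that keeps the ffmpeg command simple.

```python
import subprocess
import time
from pathlib import Path

FPS = 30  # playback rate from the text; each frame is one minute of real time


def elapsed_label(frame_index):
    """Hours/minutes label for a frame, assuming one frame per minute."""
    hours, minutes = divmod(frame_index, 60)
    return f"{hours:02d}h {minutes:02d}m"


def capture_frames(out_dir, n_frames, interval_s=60, camera_index=0):
    """Save one labeled still every interval_s seconds (needs opencv-python)."""
    import cv2  # imported lazily so the other helpers work without OpenCV

    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    cap = cv2.VideoCapture(camera_index)
    try:
        for i in range(n_frames):
            ok, frame = cap.read()
            if not ok:
                raise RuntimeError("could not read a frame from the camera")
            # Stamp the elapsed time in the top-left corner of the still.
            cv2.putText(frame, elapsed_label(i), (20, 40),
                        cv2.FONT_HERSHEY_SIMPLEX, 1.0, (255, 255, 255), 2)
            cv2.imwrite(str(out / f"frame_{i:05d}.jpg"), frame)
            time.sleep(interval_s)
    finally:
        cap.release()


def ffmpeg_command(frame_dir, out_file="timelapse.mp4", fps=FPS):
    """Argument list that stitches the numbered stills into a video."""
    return [
        "ffmpeg", "-y",
        "-framerate", str(fps),
        "-i", str(Path(frame_dir) / "frame_%05d.jpg"),
        "-c:v", "libx264", "-pix_fmt", "yuv420p",
        out_file,
    ]
```

To use it, call `capture_frames("frames", n_frames=24 * 60)` for a day of stills and then `subprocess.run(ffmpeg_command("frames"), check=True)` to build the movie.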

Step 2: Automatic Volume Estimation with SAM

So the timelapse above, subjectively, is incredibly awesome. Just being able to take a quick peek at the sourdough video in progress lets me know a ton of information, like whether or not I’ve reached the peak, when the peak was (helps for planning), and how much activity is still left in the yeast when I finally get around to paying attention to the jar in the corner. However, if you know me, you will know that I can sometimes get very distracted and lose all track of time or get deep into a project. I don’t just need something I can look at, I need something that will actively let me know when it’s done.

So, how do we do that? (And relatedly, where’s the promised nerdy ML bit?) Well, as a human looking at the timelapse above, it’s pretty easy to get an intuitive feel for when the sourdough is most active. You can see when the bubbles are forming, and you can get a feeling for when they stop forming. The volume overall increases as well, up to a maximum. After a while, towards the end of the video, you can see the whole structure starts to collapse, meaning that we are well past the peak activity of the yeast, and the yeast are no longer generating CO2 fast enough to combat the pull of gravity on the dough above. Finally, a slight smudge forms near the top of the jar, hinting that the dough is past its peak. So how do I turn that into an alarm that can let me know when it’s complete?

Enter the Segment Anything Model (SAM). Facebook/Meta open sourced SAM in April 2023 with the promise that you simply had to prompt it, and it would segment an image into logical pieces. Kind of like the Adobe Photoshop Magic Wand selection tool, but a whole lot smarter. So, I thought I’d give that a shot. Originally, I hoped that I could pass it a prompt like “dough” and it would do the rest, but it turns out that the only types of “prompting” it accepts are points or boxes. No worries, we can make that work.

Experimenting with SAM’s prompting showed that it is, in fact, quite good, though definitely not perfect. Passing in a single “prompt” point resulted in a lot of randomness from one frame to the next as bubbles formed and popped. As an example, take a look at what happens when you prompt with four points centered around where the dough first starts out. As you can see in this next video, SAM gets confused by a smudge on the sourdough jar at the beginning, sometimes deciding it is part of the sourdough and sometimes not. Then, later on in the video, a glare spot appears on the right side of the glass, and SAM stops counting the sourdough under the glare as part of the sourdough. This throws off its estimate of the total volume of sourdough starter (something that will become important in the next step).
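For readers who want to try point prompting themselves, here is a rough sketch using the `segment_anything` package’s `SamPredictor`. It is not the project’s code: the helper names, the cross-shaped layout of the four points, and the choice of the `vit_b` checkpoint are all assumptions for illustration. The mask’s pixel count stands in for the dough’s volume.

```python
def four_point_prompt(cx, cy, offset):
    """Four foreground points around (cx, cy), in pixel (x, y) order.

    The cross-shaped layout is an illustrative choice, not the original one.
    """
    return [
        (cx - offset, cy),
        (cx + offset, cy),
        (cx, cy - offset),
        (cx, cy + offset),
    ]


def load_predictor(checkpoint_path, model_type="vit_b"):
    """Build a SamPredictor from a downloaded SAM checkpoint file."""
    from segment_anything import SamPredictor, sam_model_registry

    sam = sam_model_registry[model_type](checkpoint=checkpoint_path)
    return SamPredictor(sam)


def dough_mask_area(predictor, image_rgb, points):
    """Prompt SAM with foreground points and return the mask size in pixels.

    image_rgb is an HxWx3 uint8 array in RGB order.
    """
    import numpy as np

    predictor.set_image(image_rgb)
    masks, scores, _ = predictor.predict(
        point_coords=np.array(points, dtype=np.float32),
        point_labels=np.ones(len(points), dtype=np.int32),  # 1 = foreground
        multimask_output=False,
    )
    return int(masks[0].sum())
```

Running `dough_mask_area` once per frame gives one area value per minute, which is the raw series the later smoothing works on.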

Step 3: Rolling Averages

Alright, so the first step is to fix the glare squiggles. I did three things, and between them, it nearly eliminated the SAM randomness.

First, I used a square jar instead of a round one. This condensed the “glare” section down to a small bit on the right and mostly eliminated SAM’s confusion about the subject.

Second, I fixed the lighting, and by fixing the lighting, I mean I put a bit of masking tape over the light switch in the room I was working in to make sure that I never forgot and flipped the light on and off while recording. It does work without this step, but it works better if the lights are completely consistent.

Third, I started calculating the rolling average of growth percent instead of the raw growth at each frame. (Here, growth is defined as the size of the mask identified by the SAM model.) I used a rolling average of two hours as, experimentally, that did a good job balancing the randomness of SAM with the growth rate of sourdough. (Sourdough doesn’t grow THAT fast, and waiting a couple of hours to be sure it’s done growing is 100% OK.)

Bonus Fourth: This wasn’t really necessary, but I also played around a lot with matplotlib and seaborn to make the video cooler.
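The rolling average from the third fix can be sketched in a few lines of plain Python. This is an illustration under assumptions, not the project’s code: it assumes one mask area per minute (so a two-hour window is 120 samples) and it assumes “growth percent” means the percent change relative to the first frame’s mask area.

```python
from collections import deque

WINDOW_MINUTES = 120  # two-hour window, assuming one sample per minute


def growth_percent(areas):
    """Percent change of each mask area relative to the first frame (assumed baseline)."""
    base = areas[0]
    return [100.0 * (a - base) / base for a in areas]


def rolling_mean(values, window=WINDOW_MINUTES):
    """Trailing mean over the last `window` samples (fewer at the start)."""
    out = []
    buf = deque()
    total = 0.0
    for v in values:
        buf.append(v)
        total += v
        if len(buf) > window:
            total -= buf.popleft()
        out.append(total / len(buf))
    return out


# Tiny example with a 2-sample window so the effect is easy to see.
print(rolling_mean([1, 2, 3, 4], window=2))
```

A trailing window (past samples only) is used here because an alarm can only look backwards in time; the cost is that the smoothed curve lags the real one by roughly half the window.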

Step 4: Derivatives and Alarms

Alright, so now we have a set of code that knows how large the sourdough is at any given time by using computer vision. Very cool. The only thing left is using that to figure out when the sourdough is *done*.

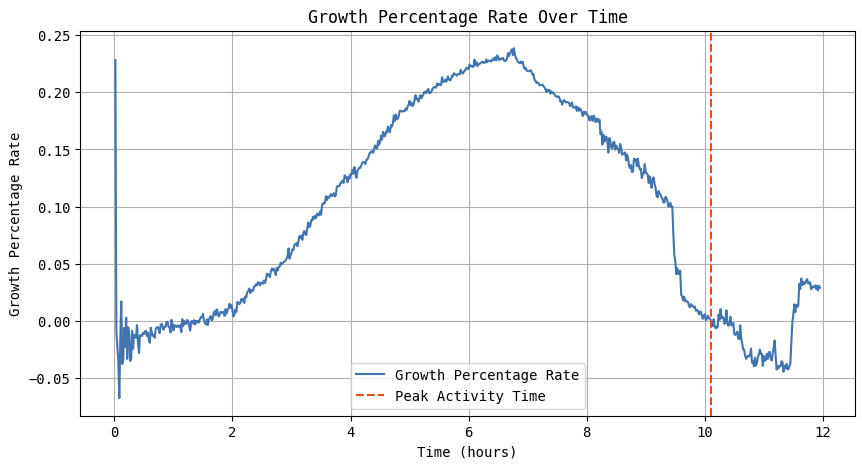

To do that, I mostly took a look at the gradient of the growth percentage in the graph above. It’s pretty clear that you start with exponential growth, which flies along until around 11 hours or so. After that, we move into a period of slightly decreasing growth until around 17 hours, and after that, growth simply stops. The yeast have eaten all the food they have available to them and are now hanging around on saved energy and/or going dormant. It’s time to get the yeast out of there and into some bread.

How do we turn that intuition into code? I decided to simply use the derivative of the rolling average. To double check that intuition, I graphed the derivative out and found that at exactly the point where I suspected the yeast were out of energy, the derivative turns negative.
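A minimal sketch of that check, assuming the smoothed growth values arrive as a plain list with one sample per minute. The function names are hypothetical, and the derivative is approximated by the difference between consecutive samples.

```python
def derivative(values, dt=1.0):
    """Forward differences: slope between each pair of consecutive samples."""
    return [(b - a) / dt for a, b in zip(values, values[1:])]


def first_peak_index(smoothed, min_index=0):
    """Index of the last sample before the slope first turns negative.

    Returns None if the series never declines. min_index lets the caller
    ignore early samples, where the curve is still settling.
    """
    for i, slope in enumerate(derivative(smoothed)):
        if i >= min_index and slope < 0:
            return i
    return None


smoothed = [0, 10, 25, 40, 48, 50, 49, 47]
peak = first_peak_index(smoothed)
print(peak, smoothed[peak])
```

In practice the alarm would fire the first time `first_peak_index` returns something other than `None`. Because the input is already a two-hour rolling average, a single negative slope is a fairly strong signal rather than frame-to-frame noise.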

Here’s a quick example of the graph from a different run where the yeast were a bit more active and maxed their growth out after 10 hours:

Wrapping Up

The final steps were fairly standard software engineering: adding all of that together, putting it in a Python script, and setting it up to send me an email (with a timelapse video embedded, of course) every time the sourdough activity peaked.
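The email step could look something like the sketch below, which uses only the Python standard library. It is an assumption-heavy illustration, not the project’s alarm code: the function names, subject line, and SMTP-over-SSL setup are invented for the example, and the video is sent as an attachment, not embedded inline.

```python
import smtplib
from email.message import EmailMessage


def build_peak_email(sender, recipient, peak_minutes, video_bytes,
                     video_name="timelapse.mp4"):
    """Compose the alert email with the timelapse attached as an MP4."""
    hours, minutes = divmod(peak_minutes, 60)
    msg = EmailMessage()
    msg["Subject"] = f"Sourdough peaked after {hours}h {minutes:02d}m"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content("The starter has peaked. Timelapse attached.")
    msg.add_attachment(video_bytes, maintype="video", subtype="mp4",
                       filename=video_name)
    return msg


def send_email(msg, host, port, user, password):
    """Send the message over SMTP with SSL; host and credentials are yours."""
    with smtplib.SMTP_SSL(host, port) as server:
        server.login(user, password)
        server.send_message(msg)
```

Keeping message construction separate from sending makes it easy to check the email’s contents without touching a mail server.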

All of the code for the project is freely available on the new py-sourdough GitHub repo. This includes all of the alarm code as well as all of the graphing and timelapse video creation code. It should be reasonably straightforward for those of you coding Python on Windows and WSL2, but will likely take more changes for folks on other platforms.

Happy Sourdoughing!